When building Django applications, there will come a time when you want to run some tasks asynchronously or automatically. For example, you might want to send users an email message every time they log in. Doing this manually wouldn't be practical. Or maybe you want to perform a calculation every day. It wouldn't make sense to run the code by hand every day; what would happen if you got sick or were too busy?

Do you know how to solve problems like these when working on a Django application? If not, stick around and follow the tutorial provided in this article. The repository for this article can be found on GitHub.

Prerequisites

To follow this tutorial, the following is required:

- A basic understanding of Django

What are Job Queues and Workers?

Before getting to what job queues are, let's first learn what a job is. A job is a unit of work (a task, function, or class) set to be executed at some point in time. A job queue holds jobs that are waiting to be executed, typically in the order they were added.

A worker is a process (or server) that pulls jobs off the job queue and executes them, one job at a time. If we have 4 workers, up to 4 jobs can run at the same time.
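To make the idea of a queue plus workers concrete, here is a minimal, in-process sketch using only Python's standard library: a queue.Queue plays the job queue and a few threads play the workers. It illustrates the concept only; Django Q and Celery run their workers as separate processes and use an external broker. The function run_jobs and the squaring jobs are hypothetical examples.

```python
import queue
import threading


def run_jobs(jobs, num_workers=4):
    """Run (func, args) jobs on a fixed pool of worker threads.

    Returns the results in the same order as the jobs; a job that raises
    an exception gets the exception object as its result.
    """
    job_queue = queue.Queue()
    results = [None] * len(jobs)

    def worker():
        while True:
            item = job_queue.get()  # blocks until a job is available
            if item is None:  # sentinel: no more jobs for this worker
                return
            index, func, args = item
            try:
                results[index] = func(*args)
            except Exception as exc:  # record the failure, keep the worker alive
                results[index] = exc

    workers = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in workers:
        t.start()

    # Enqueue every job first, then one sentinel per worker so each thread exits.
    for index, (func, args) in enumerate(jobs):
        job_queue.put((index, func, args))
    for _ in workers:
        job_queue.put(None)

    for t in workers:
        t.join()
    return results


if __name__ == "__main__":
    jobs = [(pow, (n, 2)) for n in range(10)]
    print(run_jobs(jobs, num_workers=4))  # [0, 1, 4, 9, 16, 25, 36, 49, 64, 81]
```

Each worker keeps taking jobs until it sees a sentinel, so 4 workers can be busy with 4 jobs at once while the rest wait in the queue.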

Use Cases for Job Queues and Workers in Django Applications

Below are some use cases where it would make sense to use job queues and workers when building a Django application.

- Assume that you have designed a system to watch for incoming files, such as résumés from potential employees. After someone submits a résumé, the system alerts your organization’s human resources department. This is a very good use case for job queues and workers.

- Let's say that you are building an e-commerce site. You will need an automated system that emails users when their products are shipped. You can set up a job that runs when a product is marked as shipped and sends an automated email to that user. Without a job queue, the request that marks the product as shipped would have to send the email itself and wait for it to finish, which is inefficient.

- Developers can use job queues and workers to send automated alerts, for example, notifying administrators as soon as signs of a possible database breach are detected.

- A price tracker will need a system that gets the data daily (or on any time schedule you prefer) and sends a message about the tracked goods to the user. We can automate this process and prevent the user from having to actively run the code when they want to track prices.

- Offloading tasks that take a long time to run, so they don't slow down the response the user is waiting for.

Set Up a Base Project

In this section, we will set up a simple Django application with a deliberately slow view. With this application, I will illustrate how job queues and workers can offload the slow part of a view so that the view responds without the extended wait time.

Start by installing Django with the following command:

```bash
$ pip install django
```

Next, run the command below to start a new Django project:

```bash
$ django-admin startproject project .
```

Then, run the command below to create the app:

```bash
$ python3 manage.py startapp sleep_app
```

Next, add the app you just created to the settings.py file:

```python
INSTALLED_APPS = [
    'django.contrib.admin',
    'django.contrib.auth',
    'django.contrib.contenttypes',
    'django.contrib.sessions',
    'django.contrib.messages',
    'django.contrib.staticfiles',
    'sleep_app',  # new
]
```

Now, in the views.py of sleep_app, paste the following code:

```python
from django.http import JsonResponse
from time import sleep


def slow_view(request):
    data = {
        "name": "Muhammed Ali"
    }
    sleep(7)  # simulates a slow operation that blocks the response
    return JsonResponse(data)
```

Next, update the project's urls.py file with the code below so that the view can be opened in the browser.

```python
from django.contrib import admin
from django.urls import path

from sleep_app.views import slow_view

urlpatterns = [
    path('admin/', admin.site.urls),
    path("sleep/", slow_view),
]
```

Now, when you run your project with python manage.py runserver and open http://127.0.0.1:8000/sleep/ in your browser, you'll find that it takes 7 seconds before you can see the content of the page. This is a slow process that should be offloaded so that users can get the page content as fast as possible.

How to Set Up Job Queues and Workers for Django Applications

In this section, you will learn how to use Django Q and Celery to set up job queues and workers to offload the slow process we created above.

How to Use Django Q

Django Q is an application used to manage job/task queues and workers in a Django application. It does this by using Python multiprocessing.

To set up job queues, you will need a message broker: a service that receives messages from the sender (Django), stores them in a queue, and delivers them to the receiver (the Django Q cluster). The message broker we will use in this article is Redis, but there are others you can choose from. Django puts information about each function to be executed into Redis, and the Django Q workers pick it up from there.
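Because Django and the Django Q cluster run in separate processes, they cannot hand Python function objects to each other directly; the sender puts a serialized message naming the function into the broker, and the receiver imports that function and calls it. The standard-library sketch below imitates that round trip, assuming a queue.Queue as a stand-in for Redis and JSON as the message format. It is a simplified illustration, not Django Q's actual message format; enqueue, resolve, handle_next, and the message fields are hypothetical. It also shows why a dotted path must name a module and an attribute (for example, math.sqrt) rather than a file name ending in .py.

```python
import importlib
import json
import queue

broker = queue.Queue()  # stand-in for Redis; holds serialized messages only


def enqueue(func_path, *args):
    """Sender side: describe the task by name and push it onto the broker."""
    message = json.dumps({"func": func_path, "args": list(args)})
    broker.put(message)


def resolve(func_path):
    """Import 'package.module.attr' and return the attribute."""
    module_path, _, attr = func_path.rpartition(".")
    module = importlib.import_module(module_path)
    return getattr(module, attr)


def handle_next():
    """Receiver side: pop one message, look up the function, and call it."""
    message = json.loads(broker.get())
    func = resolve(message["func"])
    return func(*message["args"])


if __name__ == "__main__":
    enqueue("math.sqrt", 49)
    print(handle_next())  # 7.0
```

A path such as "math.py.sqrt" fails in resolve() with ModuleNotFoundError, because Python looks for a submodule named py inside math.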

We will use the Redis service provided by Heroku as our message broker.

Set Up Heroku Redis on a Django Project

For this step, make sure that you already have the Heroku CLI installed on your local machine and have created an account on Heroku. Then, go to your terminal, navigate to the root of your project, and run the following commands.

```bash
git init
heroku create --region eu --addons=heroku-redis
```

The first command initializes a git repo for the project so that our project can connect to Heroku, and the second command creates a new app on Heroku with a Redis add-on. The --region flag specifies the region in which you want your application to be created.

Next, install Django Q and Redis with pip install django-q redis==3.5.3 and add "django_q" to INSTALLED_APPS in your Django settings file.

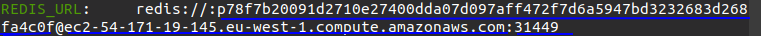

Then, run heroku config; Heroku will print your app's config vars, including the Redis credentials. We will need the REDIS_URL value later.

In the settings.py file, paste the code below to initialize the settings for Django Q.

```python
Q_CLUSTER = {
    'name': 'myproject',
    'workers': 4,  # number of workers; defaults to the number of CPUs on the machine
    'recycle': 500,
    'timeout': 60,
    'compress': True,
    'save_limit': 250,
    'queue_limit': 500,
    'cpu_affinity': 1,
    'label': 'Django Q',
    'redis': {
        'host': 'ec2-54-171-19-145.eu-west-1.compute.amazonaws.com',  # from REDIS_URL
        'password': 'p78f7b20091d2710e27400dda07d097aff472f7d6a5947bd3232683d268fa4c0f',  # from REDIS_URL
        'port': 31449,  # from REDIS_URL
        'db': 0,
    }
}
```

Replace the host, password, and port values in the code above with the ones from your own REDIS_URL.

Note: For security purposes, in a real-life project, don't hard-code credentials in your project files; load them from environment variables instead.
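One way to keep the Redis credentials out of settings.py is to read the REDIS_URL environment variable (Heroku sets it for the Redis add-on; locally you would export it yourself) and split it into the pieces Q_CLUSTER expects. The helper below is a hypothetical sketch built only on urllib.parse; it assumes a URL of the form redis://:password@host:port with an optional /db suffix and does not handle TLS (rediss://) options.

```python
import os
from urllib.parse import urlsplit


def redis_settings_from_url(url):
    """Split a redis://:password@host:port[/db] URL into Django Q's 'redis' dict."""
    parts = urlsplit(url)
    # A path like "/2" selects the database number; default to 0 when absent.
    db = int(parts.path.lstrip("/") or 0)
    return {
        "host": parts.hostname,
        "password": parts.password,
        "port": parts.port,
        "db": db,
    }


# In settings.py (assumption: REDIS_URL is set in the environment):
# Q_CLUSTER["redis"] = redis_settings_from_url(os.environ["REDIS_URL"])

if __name__ == "__main__":
    # Falls back to a made-up local URL when REDIS_URL is not set.
    url = os.environ.get("REDIS_URL", "redis://:secret@localhost:6379")
    print(redis_settings_from_url(url))
```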

You can learn more about other configuration settings in the documentation.

Next, run python manage.py migrate so that Django Q can apply its migrations.

Offloading the Sleep Function to Django Q

To offload the sleep(7) call, we will use Django Q's async_task function. Here, we pass it three things:

- The function you want to offload. In this case, it is sleepy_func, a small wrapper around sleep() that we define in q_services.py, passed as a dotted path string.
- The arguments you want to pass to the offloaded function. In this case, it is 7.
- The hook, a function to run after a worker executes the job. In this case, it is hook_funcs(), which takes the finished task as its argument.
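The func/arguments/hook contract can be imitated locally to see what a hook receives. The sketch below is a hypothetical stand-in, not Django Q's implementation: FakeTask only mimics the result attribute that this tutorial's hook reads (Django Q's real task object carries more fields), and run_task runs the function immediately instead of handing it to a worker.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class FakeTask:
    """Hypothetical stand-in for the task object passed to a hook."""
    func_name: str
    args: tuple
    result: Any = None
    success: bool = False


def run_task(func: Callable, *args, hook: Optional[Callable] = None) -> FakeTask:
    """Run func(*args) right away, record the outcome, then call hook(task)."""
    task = FakeTask(func_name=func.__name__, args=args)
    try:
        task.result = func(*args)
        task.success = True
    except Exception as exc:
        task.result = exc  # keep the error so the hook can report it
    if hook is not None:
        hook(task)
    return task


def print_result(task):
    print("The task result is:", task.result)


if __name__ == "__main__":
    run_task(max, 3, 7, hook=print_result)  # prints "The task result is: 7"
```

Since sleepy_func in this tutorial returns nothing, the real hook will report a result of None; the point is that the hook runs only after the job has finished.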

Paste the code below into your views.py file.

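```python
from django.http import JsonResponse
from django_q.tasks import async_task


def slow_view(request):
    data = {
        "name": "Muhammed Ali"
    }
    # Enqueue sleepy_func(7); a Django Q worker runs it, then calls hook_funcs.
    # Dotted paths use module names, so there is no ".py" in them.
    async_task("sleep_app.q_services.sleepy_func", 7, hook="sleep_app.q_services.hook_funcs")
    return JsonResponse(data)
```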

Next, in the sleep_app folder, create a new file named q_services.py (file name is arbitrary), which will contain all the functions required by async_task().

In q_services.py, paste the following code.

```python
from time import sleep


def hook_funcs(task):
    # Called by Django Q after the job finishes; task.result holds the return value
    print("The task result is: ", task.result)


def sleepy_func(sec):
    # This function will be taken to the job queue
    sleep(sec)
    print("sleepy function ran")
```

Next, make sure your project is running, and then run python manage.py qcluster in another terminal to spin up the Django Q cluster for your application.

Open [http://127.0.0.1:8000/sleep](http://127.0.0.1:8000/sleep), and you'll see that the page comes up immediately, showing that the sleep(7) call is now being handled by Django Q.

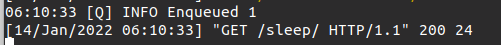

On the Django server, you’ll see that a job has been enqueued and is being handled by the workers.

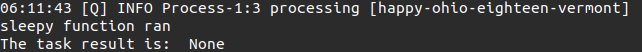

Then, after 7 seconds, you should see output in the Django Q cluster's terminal showing that the job is done.

How to Use Celery

Celery is a distributed task queue that lets you send jobs to a queue for execution. Celery workers are dedicated processes that watch the queue for new tasks and execute them.

Like Django Q, we will also need a message broker for this to work. We will need to create another app on Heroku and generate new credentials.

To illustrate this, I will create another app similar to sleep_app.

```bash
$ python3 manage.py startapp sleep_app2
```

Next, add "sleep_app2" to INSTALLED_APPS.

Then, in the views.py of sleep_app2, paste the following code:

```python
from django.http import JsonResponse
from time import sleep


def slow_view2(request):
    data = {
        "name": "Muhammed Ali"
    }
    sleep(7)  # simulates a slow operation that blocks the response
    return JsonResponse(data)
```

Next, update the project's urls.py file with the code below so that the new view can be opened in the browser.

```python
# ...
from sleep_app2.views import slow_view2  # new

urlpatterns = [
    # ...
    path("sleep2/", slow_view2),  # new
]
```

Next, install Celery by running pip install celery.

Then, in your project package (the folder that contains settings.py), create a file named celery.py and paste the following code:

```python
import os

from celery import Celery

# Set the default Django settings module for the 'celery' program.
os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'project.settings')  # path to your settings file

app = Celery('project')

# Using a string here means the worker doesn't have to serialize
# the configuration object to child processes.
# - namespace='CELERY' means all celery-related configuration keys
#   should have a `CELERY_` prefix.
app.config_from_object('django.conf:settings', namespace='CELERY')

# Load task modules from all registered Django apps. By default Celery only
# looks for modules named tasks.py; related_name makes it import each app's
# celery_services.py instead, so the worker registers the task defined there.
app.autodiscover_tasks(related_name='celery_services')


@app.task(bind=True)
def debug_task(self):
    print(f'Request: {self.request!r}')
```

Then, in project/__init__.py, paste the following code so that the Celery app we just created is loaded whenever Django starts.

```python
from .celery import app as celery_app

__all__ = ('celery_app',)
```

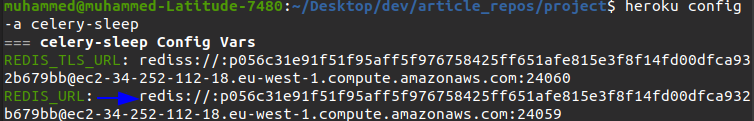

Create another project in Heroku by running heroku create --region eu --addons=heroku-redis <name-of-project>, and then run heroku config -a <name-of-project> to get your REDIS_URL.

Next, go to your settings.py file and paste the following code at the bottom of the file.

```python
CELERY_BROKER_URL = 'redis://:p056c31e91f51f95aff5f976758425ff651afe815e3f8f14fd00dfca932b679bb@ec2-34-252-112-18.eu-west-1.compute.amazonaws.com:24059'  # the REDIS_URL you just got
CELERY_ACCEPT_CONTENT = ['json']
CELERY_TASK_SERIALIZER = 'json'
```
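With namespace='CELERY', Celery reads the Django settings that start with CELERY_ and maps the rest of each name to its lowercase setting, so CELERY_BROKER_URL becomes broker_url and CELERY_TASK_SERIALIZER becomes task_serializer. The helper below is a hypothetical, simplified imitation of that mapping (not Celery's own code). It mainly shows why a misspelled key such as CELERY_TASK_SERRIALIZER has no effect: it maps to a name that matches no Celery setting. KNOWN_SETTINGS is a small, assumed subset of real Celery setting names.

```python
KNOWN_SETTINGS = {"broker_url", "accept_content", "task_serializer", "result_backend"}


def celery_keys(django_settings, namespace="CELERY"):
    """Map NAMESPACE_* settings to lowercase Celery-style keys."""
    prefix = namespace + "_"
    return {
        name[len(prefix):].lower(): value
        for name, value in django_settings.items()
        if name.startswith(prefix)
    }


def unknown_keys(django_settings):
    """Return mapped keys that match nothing in KNOWN_SETTINGS."""
    return sorted(set(celery_keys(django_settings)) - KNOWN_SETTINGS)


if __name__ == "__main__":
    settings = {
        "CELERY_ACCEPT_CONTENT": ["json"],
        "CELERY_TASK_SERRIALIZER": "json",  # misspelled
        "DEBUG": True,  # no CELERY_ prefix, so it is skipped
    }
    print(celery_keys(settings))
    # {'accept_content': ['json'], 'task_serrializer': 'json'}
    print(unknown_keys(settings))  # ['task_serrializer']
```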

In the sleep_app2 folder, create a new file named celery_services.py and paste the following code.

```python
from celery import shared_task  # used to create a task
from time import sleep


@shared_task
def sleepy_func(sec):
    # This function will be taken to the job queue
    sleep(sec)
```

Next, update your views.py with the following code:

```python
from django.http import JsonResponse

from .celery_services import sleepy_func


def slow_view2(request):
    data = {
        "name": "Muhammed Ali"
    }
    sleepy_func.delay(7)  # send the task to the broker instead of running it here
    return JsonResponse(data)
```

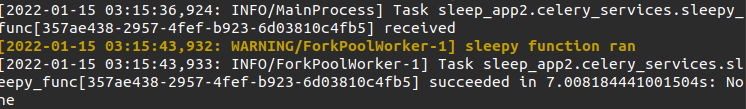

Then, run your Django server, and in another terminal window, start a Celery worker with celery -A project worker -l info.

Open the URL for sleep_app2 (http://127.0.0.1:8000/sleep2/), and you'll see that the page comes up immediately. Finally, check the Celery worker's terminal about 7 seconds later, and you will see that the job was executed successfully.

Job Queues in Production

Job queues work a little differently in production. Besides the web server, the broker and the worker processes have to be deployed and kept running, and the extra settings and configuration this takes depend on the platform you use for deployment.

If you are deploying your Django project with Celery to Heroku, Heroku's documentation describes how to run Celery workers as a separate worker process alongside your web process.

Apart from the option above, some cloud service providers offer services for working with job queues. For example, AWS has AWS Batch, and Heroku has Heroku Scheduler. You can look into those when working on the cloud.

Conclusion

This article explained what job queues and workers are and how they work. We also built a simple application that delays the display of a webpage to provide a practical example of how job queues work.

We used Django Q and Celery to remove the slow process from the view, so it can work in the background and not slow down the view.

Hopefully, with this information, you will start considering these technologies to make your projects operate as fast as possible.